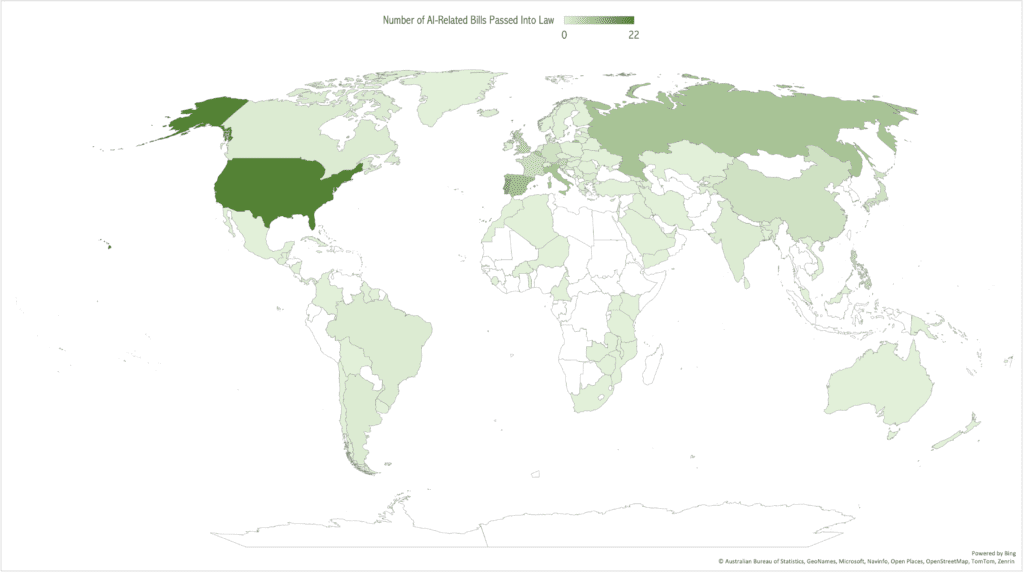

Multiple reports show growing interest among policymakers in regulating artificial intelligence. The number of bills that mention "artificial intelligence" grew from 1 in 2016 to 37 in 2022. The United States is leading the race, with 9 bills mentioning "artificial intelligence" in 2022 alone. The rest of the world is fast catching up, especially the EU with its Digital Services Act (DSA) and AI Act, which skew towards more prescriptive requirements. Even so, there is no common understanding of what specifically should be regulated, what the guardrails are, and where to draw the line between enabling innovation and mitigating risks of bias and harm. Global South countries in Asia and Africa are either lagging behind or reacting reflexively with poorly articulated restrictions that will likely impede technological growth and people's access to information. In April, Italy banned OpenAI's ChatGPT, then lifted the ban within 28 days. Many schools and universities in India have already banned ChatGPT.

These concerns are not entirely unfounded, but they are misguided. Much of this can be attributed to the significant information asymmetry between Global North and Global South countries. The majority of existing research on AI ethics and accountability is produced in high-income countries, with little attention to regional variations in the rest of the world, which leaves that research ineffective or incomplete elsewhere. Policymakers, businesses and academics in low- and middle-income countries lack the tools and resources to evaluate the efficacy of large language models (LLMs) or to audit their training data for bias. Although GPT-3.5 is now available worldwide, there is currently little to no investment in research on the extent to which its training data reflect non-Western perspectives, or on whether it perpetuates existing social and economic inequalities. In the absence of a contextual yet objective framework, national AI strategies and rushed AI legislation are throttling the positive growth of artificial intelligence in many countries.

At Tech Global Institute, we are researching and auditing the most popular and publicly accessible LLMs with the aim of developing a regionally nuanced yet objective assessment toolkit. We are engaging policymakers, research scientists, end users and businesses across South Asia to better understand the gaps and what cost-effective yet meaningful accountability could look like. What principles need to be followed? What minimum controls do AI-backed solutions need in order to mitigate risks of bias and harm? What is the right balance between transparency, privacy and trade secrets? These questions are not unique to Global South countries. However, addressing them with the political and policy contexts of low- and middle-income countries in mind will be critical to ensuring that underrepresented regions are not left further behind.

We plan to release parts of our research on the AI Assessment Toolkit in late July.